Using machine learning to tackle complex challenges

Prior to joining Element 84, our team at Azavea was always committed to pushing the boundaries of geospatial technology and machine learning to create innovative solutions for a variety of applications. As part of Element 84, a company that shares our passion for tackling complex geospatial challenges, we are excited to share our recent accomplishments and continue our work in this domain. There is a common theme that runs through all of our projects: our ability to adapt and develop cutting-edge techniques to address the unique challenges presented by geospatial data. We are committed to contributing to the advancement of geospatial technology and machine learning and look forward to working with the community to tackle new challenges in this field.

Our work spans a wide range of applications, from tree health monitoring and cloud detection to automated building footprint extraction and change detection. As you read about our diverse projects, you’ll notice not only the creativity and expertise of our team but also the versatility of the solutions we develop. Each project demonstrates our ability to tailor our approach to the specific needs of the application, be it harnessing the power of human-in-the-loop workflows or leveraging state-of-the-art neural network architectures like ResNet and FPN.

Moreover, our commitment to open-source technology and cloud-native geospatial workflows enables us to efficiently scale and customize our solutions, ensuring seamless integration with the evolving needs of Element 84’s customers. By staying at the forefront of technological advancements in geospatial machine learning, we are well-equipped to tackle even the most complex challenges faced by businesses today.

Raster Vision

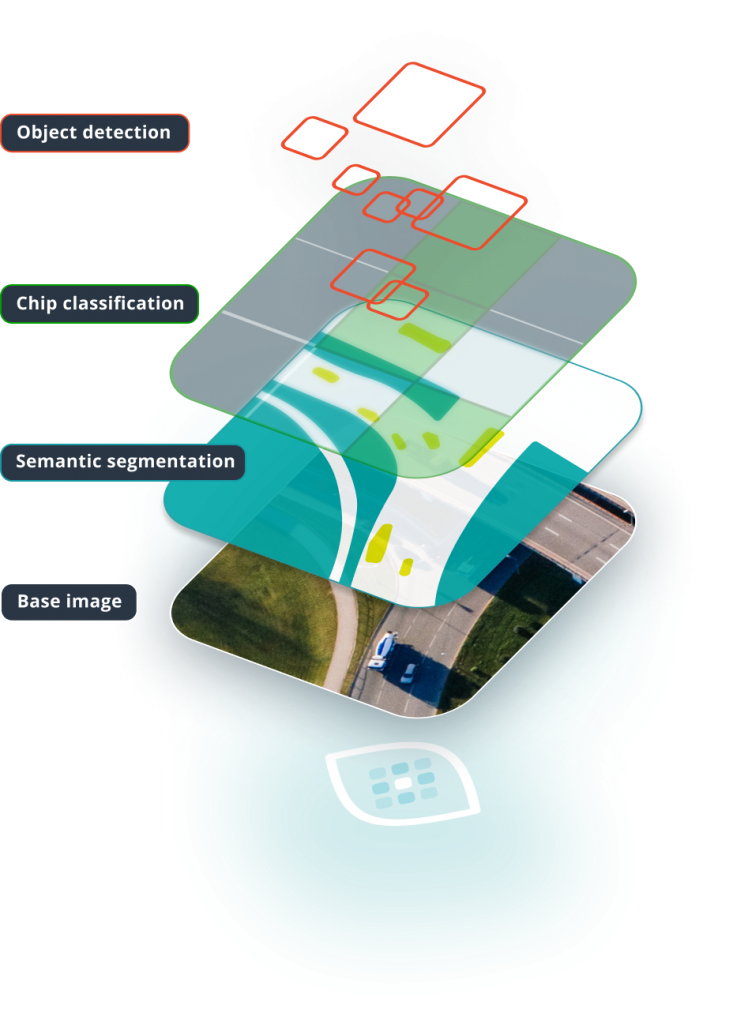

In the world of geospatial analysis, handling and processing large volumes of georeferenced raster and vector data is a significant challenge. The ability to extract valuable insights from this data is crucial for a wide range of industries, from agriculture to urban planning. As the creators of Raster Vision, we at Element 84 have developed a powerful framework for managing geospatial data and computer vision tasks. Our aim is to address this problem by providing a flexible, customizable solution. The recent release of Raster Vision 0.20 marks an important step forward in making geospatial data processing more accessible and efficient.

Raster Vision’s primary goal is to simplify the handling of geospatial data while enabling users to take advantage of cutting-edge machine learning techniques. With the 0.20 release, our team at Element 84 has transformed Raster Vision from a low-code framework to a library that can be used in Jupyter notebooks and combined with popular machine learning libraries. This shift allows users to break away from rigid pipeline structures, affording them more control over their projects and fostering innovation.

The 0.20 release also brings several notable improvements to Raster Vision’s feature set. Support for multiband imagery and external models has been extended to all computer vision tasks, including chip classification, object detection, and semantic segmentation. This flexibility enables users to work with a broader range of data sources and machine learning models. Furthermore, Raster Vision now offers improved data fusion capabilities, allowing the combination of raster data from multiple sources with different resolutions and extents. In addition, the release introduces cleaner semantic segmentation output by allowing users to discard edges of predicted chips and reduce boundary artifacts.
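To make the idea of discarding chip edges concrete, here is a minimal NumPy sketch. It is not Raster Vision's API: the function name `crop_chip_edges` and the `crop` parameter are hypothetical and chosen only for illustration. The intuition is that predictions near a chip's border see less surrounding context, so keeping only the central region of each predicted chip can reduce boundary artifacts.

```python
import numpy as np


def crop_chip_edges(pred: np.ndarray, crop: int) -> np.ndarray:
    """Drop `crop` pixels from every side of a (H, W) prediction chip.

    Hypothetical helper for illustration; not part of Raster Vision.
    """
    if crop == 0:
        return pred
    h, w = pred.shape[:2]
    if 2 * crop >= min(h, w):
        raise ValueError("crop is too large for this chip")
    return pred[crop:h - crop, crop:w - crop]


# A toy 6x6 "prediction" whose values are just pixel indices.
chip = np.arange(36).reshape(6, 6)
center = crop_chip_edges(chip, crop=2)
print(center)  # the central 2x2 block: [[14 15] [20 21]]
```

In practice, chips would need to overlap by at least the cropped margin so that the retained centers still tile the full scene.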

Raster Vision 0.20 presents an opportunity for our customers to streamline geospatial data processing and leverage the latest advances in machine learning. This release not only offers improved documentation, tutorials, and support for multiband imagery and external models, but also promises deeper integration with SpatioTemporal Asset Catalog (STAC) and Element 84’s FilmDrop product. In summary, Raster Vision 0.20, developed by Element 84, is a valuable tool for organizations seeking to harness the power of geospatial data while maintaining flexibility and customization. To learn more about Raster Vision, visit the project website, browse the GitHub repository, and explore the documentation.

Change Detection with Raster Vision

Our Change Detection with Raster Vision work aimed to explore a direct classification approach to change detection using our open-source geospatial deep learning framework Raster Vision and the publicly available Onera Satellite Change Detection (OSCD) dataset. This work has significant applications in remote sensing for detecting deforestation, urban land use changes, and assessing building damage following disasters.

This work also provides a good demonstration of Raster Vision’s various data-handling and machine-learning capabilities. For example, we demonstrate how Raster Vision can be used to fuse together image bands from multiple sources while ensuring geospatial alignment; how pixel values can be normalized based on the statistics of each band; and how the loss function can be tweaked to handle class imbalances.
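The two ideas mentioned here, per-band normalization and a class-weighted loss, can be sketched in plain NumPy. This is an illustrative sketch under our own assumptions rather than the code used in the project: the helper names are hypothetical, the image is random data, and inverse-frequency weighting is only one common way to handle class imbalance.

```python
import numpy as np


def normalize_per_band(img: np.ndarray) -> np.ndarray:
    """Standardize a (H, W, C) image using each band's own mean and std."""
    mean = img.mean(axis=(0, 1), keepdims=True)
    std = img.std(axis=(0, 1), keepdims=True)
    return (img - mean) / np.maximum(std, 1e-8)


def inverse_frequency_weights(labels: np.ndarray, num_classes: int) -> np.ndarray:
    """Per-class loss weights that grow as a class becomes rarer."""
    counts = np.bincount(labels.ravel(), minlength=num_classes).astype(float)
    return counts.sum() / (num_classes * np.maximum(counts, 1.0))


# Random stand-in for a 3-band image with very different band ranges.
rng = np.random.default_rng(0)
img = rng.uniform(0, 10_000, size=(8, 8, 3)) * np.array([1.0, 0.1, 0.01])
norm = normalize_per_band(img)

# "Change" (class 1) is rare compared to "no change" (class 0).
labels = np.array([[0, 0, 0, 1]])
weights = inverse_frequency_weights(labels, num_classes=2)
print(weights)  # the rare class gets the larger weight
```

Weights like these can then be passed to a weighted cross-entropy loss so that mistakes on the rare "change" pixels cost more than mistakes on the abundant "no change" pixels.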

This capability has utility for Element 84’s customers who are interested in building or leveraging advanced models for change detection using open-source tools such as Raster Vision. The project’s outcomes can help improve our ability to monitor and respond to changes in the environment, urban development, and post-disaster recovery efforts.

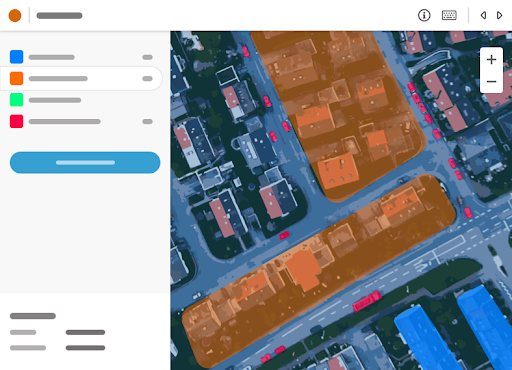

GroundWork

GroundWork enables the human-in-the-loop workflow described below, but it does much more. GroundWork is designed specifically for labeling satellite and aerial imagery, enabling users to retain crucial spatial context found within the image. With its intuitive interface and unique features, such as the “campaigns” feature, GroundWork streamlines the process of managing and labeling multiple images for machine learning training datasets. By incorporating GroundWork into their geospatial data workflows, Element 84’s customers can further enhance the efficiency of their data labeling and model training processes. For more information on GroundWork and its features, check out the official announcement and the campaigns feature announcement, or explore the GroundWork application directly.

Human-in-the-Loop

We have developed a human-in-the-loop (HITL) machine learning workflow for geospatial data and made it available as an experimental feature in GroundWork. Using a HITL workflow allows one to train a performant machine learning model using only a fraction of the labeled data that would otherwise be needed, thus greatly reducing labeling costs.

In a related blog post, we describe in detail how this works under the hood and investigate design choices such as uncertainty sampling, model training and fine-tuning, and aggregation techniques to understand the effectiveness of the HITL active learning workflow for geospatial data. We found that, depending on the nature of the scene being labeled, the HITL approach can significantly outperform a naive data-labeling approach for the same amount of labeling effort.
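For readers unfamiliar with uncertainty sampling, the sketch below shows one common variant: rank unlabeled regions by the entropy of the model's predicted class probabilities and send the most uncertain ones to a human labeler. This is a generic illustration, not the GroundWork implementation; the function names and the toy probabilities are assumptions.

```python
import numpy as np


def entropy(probs: np.ndarray) -> np.ndarray:
    """Shannon entropy of each row of class probabilities."""
    p = np.clip(probs, 1e-12, 1.0)
    return -(p * np.log(p)).sum(axis=-1)


def pick_most_uncertain(probs: np.ndarray, k: int) -> np.ndarray:
    """Indices of the k rows the model is least sure about."""
    scores = entropy(probs)
    return np.argsort(-scores)[:k]


# Toy model outputs for four unlabeled tiles (two classes).
probs = np.array([
    [0.98, 0.02],  # confident
    [0.55, 0.45],  # unsure
    [0.80, 0.20],  # fairly confident
    [0.50, 0.50],  # maximally unsure
])
to_label = pick_most_uncertain(probs, k=2)
print(to_label)  # [3 1]
```

After the selected tiles are labeled, the model is retrained or fine-tuned and the loop repeats, which is how labeling effort ends up concentrated where the model is weakest.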

The HITL active learning workflow presented in this project can be utilized to improve the efficiency and effectiveness of data labeling and model performance for a range of geospatial data tasks. By incorporating the insights gained from this project, Element 84 customers can prioritize labeling efforts based on model weaknesses, reduce labeling costs, and enhance the overall performance of their geospatial data models.

Machine Learning Research & Development Projects

In the rapidly evolving field of machine learning, research and development projects play a crucial role in driving innovation and pushing the boundaries of what is possible. This section highlights two such cutting-edge projects, each demonstrating the transformative potential of machine learning in addressing real-world challenges.

Geospatial Time Series R&D Project

The Time Series Prediction R&D project aims to utilize time series of Sentinel-2 imagery to perform land-use/land-cover (LULC) tasks despite occlusions, such as clouds, and to detect LULC types with a seasonal component, like agriculture. Applications for this technology include general LULC, precision agriculture, and forest and agriculture inventorying (e.g., for carbon credits). Please stay tuned for a forthcoming dedicated blog on this project.

Although this project is ongoing, it has already demonstrated that an attention mechanism can automatically discard unhelpful images and parts of images (e.g., those covered by clouds) and focus on the informative ones.
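The core of attention-based pooling over a time series can be shown in a few lines. The sketch below is a simplification under stated assumptions: in a real model the per-date scores are produced by learned layers, whereas here they are set by hand, and the feature vectors are made up. It is not the architecture used in the project.

```python
import numpy as np


def softmax(x: np.ndarray) -> np.ndarray:
    """Numerically stable softmax over a 1-D array."""
    e = np.exp(x - x.max())
    return e / e.sum()


def attention_pool(features: np.ndarray, scores: np.ndarray):
    """Weighted average of (T, D) features using softmax of (T,) scores."""
    weights = softmax(scores)
    return weights @ features, weights


# Three acquisition dates for one pixel; the third is a cloudy outlier.
features = np.array([
    [1.0, 0.0],
    [0.9, 0.1],
    [5.0, 5.0],
])
# Hand-set scores standing in for a learned scoring network.
scores = np.array([2.0, 2.0, -6.0])

pooled, weights = attention_pool(features, scores)
print(weights.round(4))  # the cloudy date receives almost no weight
print(pooled.round(3))
```

Because the weights sum to one and the cloudy date's weight is close to zero, the pooled representation is dominated by the clear observations.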

The advancements made in the Geospatial Time Series R&D project allow Element 84 to deliver enhanced value to its customers through more efficient processing of satellite imagery data and reduced reliance on manual intervention. This technology enables the automatic recognition of clouds and cloud shadows, and the ability to automatically discard clouds, cloud shadows, and/or other occlusions and irrelevancies, significantly improving the quality of land-use/land-cover analysis. The attention-based classification model, and the cloud and cloud shadow model produced as a byproduct of this research, have the potential to enhance various applications such as precision agriculture, forest and agriculture inventorying, and general land-use/land-cover mapping. This capability provides Element 84’s customers with more accurate and efficient solutions for their geospatial needs.

Cicero NLP

The motivation behind the Cicero NLP project lies in the labor-intensive and expensive process of maintaining and updating the Cicero database, which contains information about elected officials. Automating this process could lead to significant cost savings and efficiency improvements. To achieve this, the project aims to leverage machine learning and natural language processing (NLP) techniques to extract relevant information from politicians’ websites, augmenting and maintaining the database in the process.

To address this problem, the Cicero NLP project aims to accurately identify and extract information such as politician names, contact information, capital addresses, district addresses, and political affiliations from elected officials’ websites. The project uses NLP within the named entity recognition paradigm to achieve this objective, which will ultimately help update and maintain the Cicero database. By training models on a diverse range of websites, the project aims to overcome challenges such as variations in data quality and website formats. For more information on the project, its GitHub repository and an accompanying blog are available.

The project’s results demonstrate the effectiveness of machine learning and NLP technology in automating the process of updating and maintaining the Cicero database. The system’s ability to recognize relevant named entities from a variety of politicians’ websites highlights its potential. The Cicero NLP project not only paves the way for a more efficient database maintenance process but also opens up possibilities for developing geospatial software that leverages political-geographical information, or textual information more broadly, for various applications. These applications could include political trend analysis and targeted campaign material creation, further extending the impact of the project.

Small Business Innovation Research Grants

In this section, we explore two innovative projects supported by Small Business Innovation Research (SBIR) grants, which aim to address complex environmental challenges through the application of machine learning techniques. These projects demonstrate the potential of cutting-edge technologies to transform the way we understand and respond to our changing world. Additionally, the projects below highlight the value of interdisciplinary collaboration and the power of machine learning in tackling critical environmental issues.

NASA SBIR

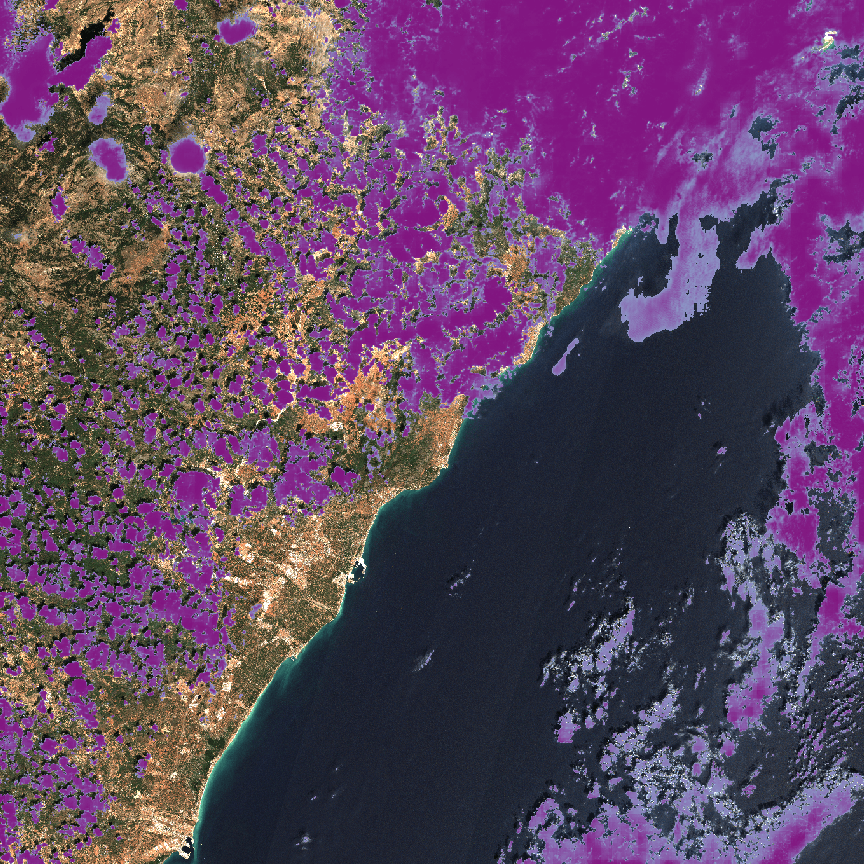

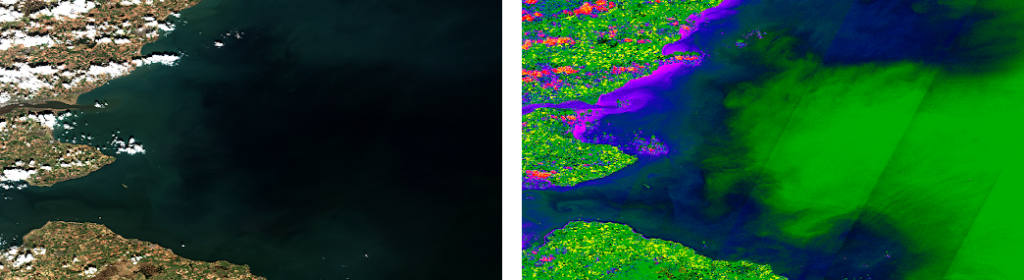

The NASA hyperspectral imagery (HSI) small business innovation research (SBIR) project aimed to develop technologies for indexing and querying hyperspectral imagery while identifying and exploring interesting machine learning problems in this emerging area. With many hyperspectral satellites expected to launch soon, the project focused on applications such as tree health (discriminating between healthy and unhealthy conifers using hyperspectral imagery and machine learning) and detecting algal blooms using hyperspectral imagery.

The project demonstrated convincing results in both tree health and algal bloom cases, using hyperspectral imagery and machine learning. However, it was also noted that problems requiring fine distinctions visible in HSI but not in multispectral imagery tend to require collaboration with subject matter experts due to the involved nuances. The associated GitHub repository is accessible to all.

A lighter weight model architecture developed at Element 84, CheapLab, was used in this project. This model architecture combines the best elements of traditional remote sensing indices and machine learning to provide high accuracy and fast runtime. Additionally, since the harmful algal bloom case involved point observations, the project explored using classified chips for training of segmentation models.

The knowledge and experience gained from the HSI project can bring significant value to Element 84’s customers. The project dealt with large datasets and developed models for harmful algal blooms (HABs) that automatically learned to segment clouds, water, different land use types, sedimentary activity in water, and algal blooms in water. This expertise and experience in handling large datasets, particularly in HSI, is a valuable asset to our customers who face similar challenges in this field. By leveraging this knowledge and experience, our customers can make more informed decisions and gain a competitive advantage in their respective industries.

NOAA Flood Mapping SBIR

The NOAA SBIR Project on Flood Inundation Mapping aimed to use machine learning techniques on synthetic aperture radar (SAR) data, along with other datasets such as USFIMR, Sentinel-1, and topographic data, to accurately distinguish between permanent water and water due to flood events. The project’s primary goal was to improve flood prediction, preparation, and response efforts by providing accurate flood inundation maps that can help decision-makers allocate resources and prioritize actions.

To address this challenge, the project employed machine learning techniques, including convolutional neural networks (CNNs) for semantic segmentation tasks, to classify each pixel in the SAR images as either floodwater or not. Transfer learning techniques were employed to leverage existing pre-trained models. The team also used data augmentation techniques, such as rotation and flipping, to increase the size of the training dataset and improve the model’s performance. Various evaluation metrics, such as mean Intersection over Union (mIoU) and pixel accuracy, were used to assess the performance of the models.
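As a quick reference for the metrics and augmentations named above, the following NumPy sketch computes pixel accuracy and mean IoU for a small flood mask and applies the same rotation and flip to an image and its label. The helper names and toy arrays are ours, for illustration only; this is not the project's evaluation code.

```python
import numpy as np


def pixel_accuracy(pred: np.ndarray, target: np.ndarray) -> float:
    """Fraction of pixels whose predicted class matches the label."""
    return float((pred == target).mean())


def mean_iou(pred: np.ndarray, target: np.ndarray, num_classes: int) -> float:
    """Average of per-class intersection over union, skipping absent classes."""
    ious = []
    for c in range(num_classes):
        inter = np.logical_and(pred == c, target == c).sum()
        union = np.logical_or(pred == c, target == c).sum()
        if union > 0:
            ious.append(inter / union)
    return float(np.mean(ious))


def augment(image: np.ndarray, mask: np.ndarray, k: int, flip: bool):
    """Rotate by k * 90 degrees and optionally mirror, identically for both."""
    image = np.rot90(image, k, axes=(0, 1))
    mask = np.rot90(mask, k, axes=(0, 1))
    if flip:
        image = np.flip(image, axis=1)
        mask = np.flip(mask, axis=1)
    return image, mask


# 1 = floodwater, 0 = everything else.
target = np.array([[1, 1, 0, 0],
                   [1, 1, 0, 0]])
pred = np.array([[1, 0, 0, 0],
                 [1, 1, 0, 0]])

print(pixel_accuracy(pred, target))           # 0.875
print(mean_iou(pred, target, num_classes=2))  # (0.8 + 0.75) / 2 = 0.775

aug_pred, aug_target = augment(pred, target, k=1, flip=True)
```

Note how a single missed flood pixel costs more in mIoU than in pixel accuracy, which is one reason mIoU is often preferred when the classes are imbalanced.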

Throughout this work, the team successfully combined innovative data processing techniques, careful model selection and tuning, and creative approaches to handling imbalanced data. The project’s outcomes showed that traditional vision models designed for optical imagery could be used with SAR and SAR+HAND data to accurately distinguish between permanent water and water due to flood events. Including HAND data in the training and inference set further improved the models’ results. These results can be used to develop geospatial software that accurately predicts and maps flood events, aiding in flood prediction and response efforts.

The NOAA SBIR Project on Flood Inundation Mapping demonstrated the potential of machine learning techniques, such as CNNs and transfer learning, to analyze SAR data and create accurate flood inundation maps. The project highlights those techniques’ potential to contribute to more effective flood prediction and response efforts, ultimately benefiting communities at risk from flood events.

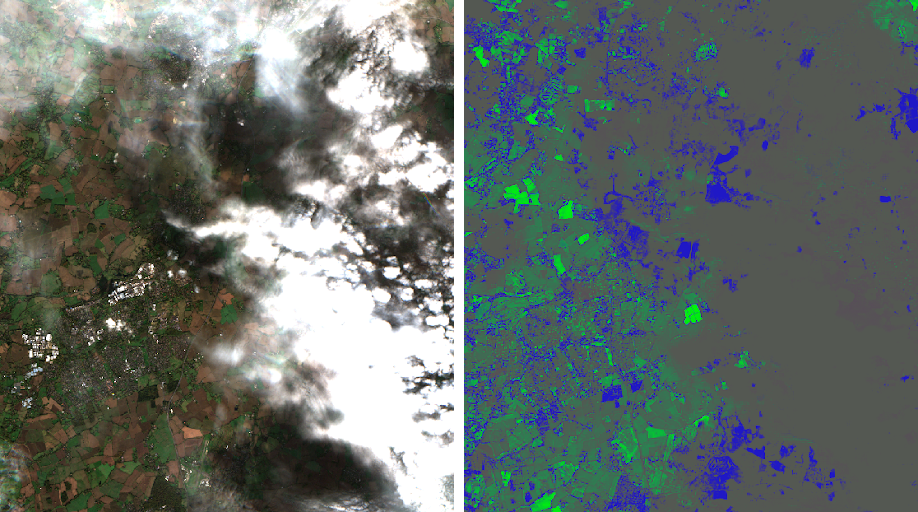

Cloud Detection Model

The field of geospatial machine learning has a strong need for efficient and accurate cloud detection in satellite imagery. Clouds can obscure important information, and detecting them is a crucial prerequisite for many applications that rely on optical satellite data. The Cloud Model project aims to address this challenge by providing a set of cloudy Sentinel-2 images with human-produced labels and models trained on this dataset. By achieving an F1 score above 0.8 while comfortably performing inference on a CPU, the project has the potential to improve the processing and analysis of satellite imagery in the industry.

The problem the Cloud Model project seeks to solve is the automatic detection and removal of clouds in satellite imagery. As part of the project, we developed two main capabilities to achieve this: a standard ResNet-18-based FPN for segmentation and a model based on the CheapLab architecture for segmentation. The former offers excellent performance, while the latter performs slightly worse but still very well and is suitable for use on cost-effective CPUs. Two blog posts were released in conjunction with the project: one announcing the models and another announcing the release of the dataset. The project’s GitHub repository is also publicly accessible.

The project addresses a core need in geospatial machine learning, where automatic detection of clouds is essential. Element 84’s customers, who deal with large amounts of data, stand to benefit greatly from reduced human intervention in the cloud detection process. The Cloud Model contributes to more efficient processing and analysis of satellite imagery, allowing Element 84’s customers to make better-informed decisions based on their data. The two models, with F1 scores in the 0.9 and 0.8 range, respectively, can be applied to improve the processing and analysis of satellite imagery.
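For context on the F1 scores quoted above, here is how an F1 score can be computed for a binary cloud mask. The function and the toy masks are illustrative assumptions, not the project's evaluation code.

```python
import numpy as np


def f1_score(pred: np.ndarray, target: np.ndarray) -> float:
    """F1 = 2*TP / (2*TP + FP + FN) for binary masks (cloud = True)."""
    pred = pred.astype(bool)
    target = target.astype(bool)
    tp = np.logical_and(pred, target).sum()
    fp = np.logical_and(pred, ~target).sum()
    fn = np.logical_and(~pred, target).sum()
    denom = 2 * tp + fp + fn
    # With no clouds predicted and none present, treat the result as perfect.
    return float(2 * tp / denom) if denom else 1.0


# Toy flattened masks: 1 = cloud, 0 = clear.
target = np.array([1, 1, 1, 0, 0, 0])
pred = np.array([1, 1, 0, 1, 0, 0])

print(round(f1_score(pred, target), 3))  # 2 TP, 1 FP, 1 FN -> 0.667
```

F1 is the harmonic mean of precision and recall, so it penalizes a model both for flagging clear pixels as cloud and for missing real clouds.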

Building Footprint Detection

The accurate mapping of buildings is essential for urban planning, disaster response, and infrastructure development. In recent years, machine learning has been employed to extract building footprints from satellite and aerial imagery, offering a more efficient and precise approach to this task. A comprehensive overview of the various techniques and advancements in this domain can be found in a series of articles by Azavea: Part 1: Open Datasets, Part 2: Evaluation Metrics, and Part 3: Model Architectures.

The use of machine learning in building footprint extraction has primarily centered around semantic segmentation and instance segmentation. However, these methods often lack the precision required for cartographic applications. To address this issue, researchers have developed more sophisticated models, leveraging domain-specific knowledge to improve accuracy.

While advancements such as PolyMapper and Frame Field Learning offer considerable progress in addressing this issue, there are still limitations to overcome. For example, many models cannot handle shared walls between buildings or generate polygons with holes. As a result, further research and development are needed to refine these techniques and provide even greater accuracy for mapping applications.

For Element 84 and its customers, the survey of these techniques highlights the potential value of incorporating machine learning into mapping solutions. The continued improvement of building footprint extraction methods can lead to more accurate urban planning, infrastructure development, and disaster response capabilities. While no single method is perfect, the ongoing development of these techniques promises to improve the accuracy and utility of building data for a wide range of applications.

Mapping Africa

The Mapping Africa project set out to develop an open-source tool that could provide high-quality training data for machine learning algorithms to map agricultural fields in Africa using satellite imagery and crowdsourced annotations. The primary goal was to create accurate and high-resolution cropland maps for Ghana, with potential for extension to other African countries. This tool can assist researchers, governments, and organizations in understanding the distribution of agricultural land, supporting informed decision-making in areas such as food security and land use planning.

The project employed a range of techniques, including cloud-free mosaic creation, machine learning, crowdsourcing, Bayesian averaging, COG-oriented workflows, SparkML, and RasterFrames. By using crowdsourcing, the team gathered valuable human input to improve the accuracy of the final fitted models for identifying agricultural fields.

Ultimately, the project demonstrated the utility of open-source tools and cloud-native geospatial workflows in solving data disparities and creating accurate maps for developing regions. The outcomes of the Mapping Africa project showed that the combination of crowdsourcing and machine learning significantly improved the accuracy of the models used to identify agricultural fields. This accomplishment highlights the potential of leveraging human input and advanced technology to create precise, high-resolution cropland maps for regions that lack accurate and up-to-date data.

The Mapping Africa project successfully demonstrated the power of combining crowdsourced annotations with machine learning algorithms to generate high-quality training data for mapping agricultural fields in Africa. This innovative approach has the potential to contribute significantly to our understanding of land use patterns and agricultural productivity in developing regions, ultimately benefiting communities that rely on agriculture for their livelihoods and food security.

Philadelphia School Bus Routing

The Philadelphia School Bus Optimization Project was driven by the need to increase the efficiency of a subpart of the Philadelphia public school bus system, particularly for charter schools with students from across the city. Improving efficiency in this system could lead to cost savings, environmental benefits, and enhanced quality of student transportation through reduced ride times.

The project’s primary objective was to use road network data and pickup/dropoff locations provided by the city to model the problem as a variant of the Vehicle Routing Problem (VRP). By optimizing bus routes and reducing the number of buses needed, the project aimed to deliver tangible benefits to the city’s school transportation system.

To tackle this challenge, the project employed road network data, pickup/dropoff locations, and OptaPlanner, a constraint solver, to model and solve the VRP. The project faced obstacles such as data quality issues, modeling the problem as a VRP, and tuning the optimization to achieve the desired outcomes.
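To give a feel for what a capacitated VRP looks like, the toy sketch below builds routes with a greedy nearest-neighbor heuristic. It is deliberately simplistic and is not how the project solved the problem: the project used OptaPlanner on real road network data, whereas this sketch uses straight-line distances, made-up stops, and a hypothetical `greedy_routes` helper.

```python
import math


def greedy_routes(depot, stops, capacity):
    """Build bus routes by repeatedly visiting the nearest stop that still fits.

    depot: (x, y); stops: {name: (x, y, riders)}; capacity: seats per bus.
    """
    unvisited = dict(stops)
    routes = []
    while unvisited:
        pos, load, route = depot, 0, []
        while True:
            # Stops whose riders still fit on the current bus.
            candidates = [
                (math.dist(pos, (x, y)), name)
                for name, (x, y, riders) in unvisited.items()
                if load + riders <= capacity
            ]
            if not candidates:
                break
            _, name = min(candidates)
            x, y, riders = unvisited.pop(name)
            route.append(name)
            load += riders
            pos = (x, y)
        if not route:
            raise ValueError("a stop has more riders than one bus can hold")
        routes.append(route)
    return routes


# Made-up stops: name -> (x, y, number of riders).
stops = {
    "A": (1, 0, 20),
    "B": (2, 0, 20),
    "C": (0, 3, 30),
    "D": (0, 4, 10),
}
routes = greedy_routes(depot=(0, 0), stops=stops, capacity=40)
print(routes)  # [['A', 'B'], ['C', 'D']]
```

A constraint solver improves on a construction heuristic like this by searching over many alternative assignments and orderings while respecting constraints such as capacity and ride-time limits.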

The outcomes of the project were significant, leading to a 20% reduction in the number of buses needed, a 25% decrease in bus-miles driven, and a 10% reduction in both average and median student ride times. These results can be used to develop geospatial software that optimizes bus routes and reduces the number of buses needed for school transportation, further extending the project’s impact. The Philadelphia School Bus Optimization Project successfully demonstrated the potential of leveraging data and optimization techniques to bring about meaningful improvements in school transportation efficiency, cost savings, and environmental benefits.

The Future of Machine Learning at Element 84

Melding Azavea’s extensive experience in geospatial machine learning and remote sensing with the Element 84 team is an exciting step forward. Our combined expertise will provide customers with state-of-the-art solutions to tackle complex geospatial challenges, empowering them to harness the full potential of geospatial data, make better-informed decisions, and drive innovation in the industry.